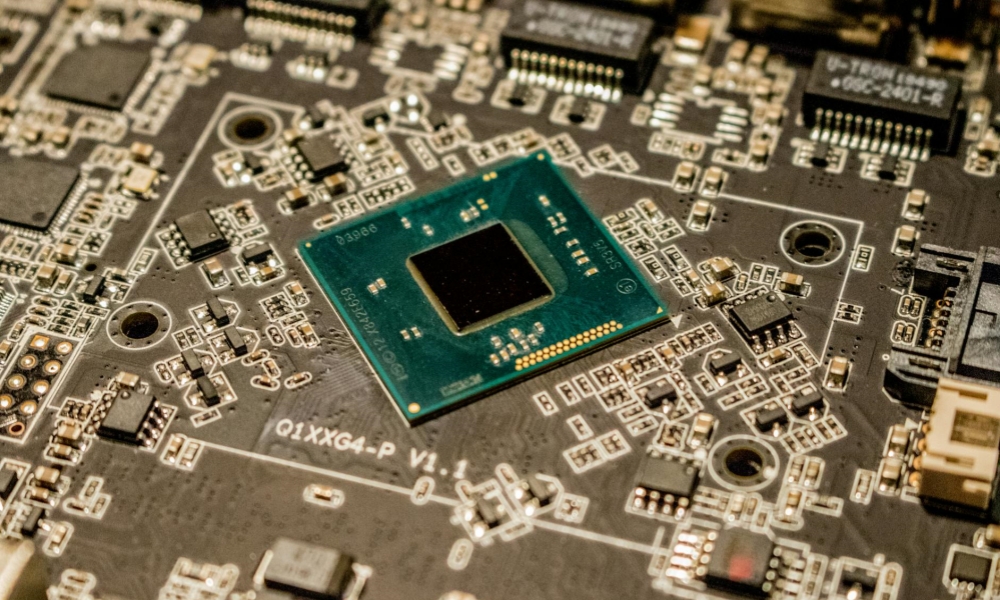

1. NVIDIA Tensor Core GPUs: NVIDIA’s Tensor Core GPUs are widely used to accelerate AI and deep learning workloads. Tensor Cores are specialized units that perform matrix multiply-accumulate operations, the arithmetic at the heart of neural network computations, typically in mixed precision. This gives these GPUs high throughput for both training and deploying AI models. With GPUs such as the NVIDIA Tesla V100 and NVIDIA A100, developers and researchers can combine Tensor Cores with the GPU’s general parallel processing capabilities to speed up AI research, scientific computing, and data analytics.

2. Google Cloud TPUs: Google’s Cloud Tensor Processing Units (TPUs) are custom-built AI accelerators (application-specific integrated circuits) designed to handle demanding machine learning workloads in the cloud. They are optimized for the large matrix operations found in neural networks, which makes them well suited to both model training and inference. Through TPU Pods, which are large groups of TPU chips linked by a high-speed interconnect and offered on Google Cloud Platform, users can scale workloads across many chips, shorten training times considerably, and achieve cost-effective performance for AI-driven applications and services.

3. Intel Movidius Neural Compute Stick: Intel’s Movidius Neural Compute Stick is a compact, USB-attached AI accelerator for running deep learning inference at the network edge. Designed for power-efficient AI processing on IoT devices, drones, and other edge systems, it performs inference locally in real time without relying on cloud connectivity. Because the stick’s dedicated Movidius vision processing unit (VPU) handles the neural network computation, developers can deploy AI models directly on edge devices, enabling on-device decision-making, object recognition, and predictive analytics in resource-constrained environments.

4. AMD Radeon Instinct MI series: AMD’s Radeon Instinct MI series GPUs are designed for high-performance computing (HPC) and AI workloads, including deep learning training, scientific simulations, and other data-intensive processing. They combine high compute throughput, high memory bandwidth, and multi-GPU scalability, so users can apply their parallel processing capabilities to AI-driven research, computational modeling, and other tasks that require accelerated computing.

These platforms illustrate the range of specialized AI hardware available today, from data-center GPUs and cloud TPUs to low-power edge accelerators, and they are driving advances in AI research, machine learning applications, and data processing across many industries. By matching hardware such as NVIDIA Tensor Core GPUs, Google Cloud TPUs, the Intel Movidius Neural Compute Stick, or AMD Radeon Instinct GPUs to their workloads, developers, researchers, and businesses can build faster AI-driven solutions and push the limits of computational efficiency and performance.